What is Segmentation in Computer Vision?

Published on: Aug 14, 2024

Written by: Admin

Understanding Segmentation in Computer Vision

Segmentation in computer vision divides an image into distinct regions for easier analysis, crucial for applications like medical imaging, autonomous driving, and image editing.

What is Segmentation in Computer Vision?

Segmentation in computer vision can be broadly classified into two types:

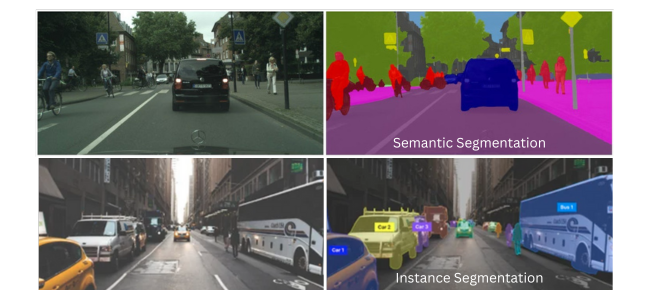

- Semantic Segmentation: This involves labeling each pixel in an image with a class label. For example, in an image of a street scene, all pixels belonging to cars are labeled as "car," all pixels belonging to pedestrians are labeled as "pedestrian," and so on. This type of segmentation does not distinguish between different instances of the same class.

- Instance Segmentation: This not only labels each pixel with a class label but also differentiates between different instances of the same class. For example, each car in an image would be identified as a separate instance with a unique label.

Key Differences Between Segmentation and Detection AI Models

While both segmentation and detection AI models are used to analyze and interpret images, they serve different purposes and employ different methodologies. Here are the key differences between the two:

- Objective:

- Detection: The primary goal of object detection is to identify and locate objects within an image. It involves drawing bounding boxes around objects and classifying them into predefined categories.

- Segmentation: The goal of segmentation is to partition an image into regions corresponding to different objects or parts of objects. It provides pixel-level classification, offering a more detailed understanding of the image.

- Output:

- Detection: The output of an object detection model is a set of bounding boxes and class labels for the detected objects. Each bounding box defines the coordinates of the object's location within the image.

- Segmentation: The output of a segmentation model is a mask that labels each pixel in the image. In semantic segmentation, the mask assigns a class label to each pixel, while in instance segmentation, it also differentiates between instances of the same class.

- Granularity:

- Detection: Provides a coarse-grained analysis of the image by identifying and localizing objects with bounding boxes.

- Segmentation: Offers a fine-grained analysis by providing pixel-level information, enabling a more precise understanding of the image.

- Applications:

- Detection: Commonly used in applications such as autonomous driving (detecting pedestrians, vehicles, traffic signs), surveillance (identifying people and objects), and image retrieval (finding specific objects within images).

- Segmentation: Used in applications like medical imaging (segmenting organs and tumors), image editing (isolating objects for manipulation), and augmented reality (placing virtual objects in real-world scenes).

Example Use Cases

- Autonomous Driving:

- Detection: Identifying and localizing vehicles, pedestrians, traffic lights, and other objects on the road.

- Segmentation: Understanding the scene at a pixel level to differentiate between lanes, road boundaries, and objects, which helps in path planning and navigation.

- Medical Imaging:

- Detection: Identifying the presence of abnormalities such as tumors in medical scans.

- Segmentation: Delineating the exact boundaries of organs, tissues, and abnormalities for accurate diagnosis and treatment planning.

- Image Editing:

- Detection: Identifying objects within an image for basic manipulation.

- Segmentation: Isolating objects or regions for more complex edits such as background replacement or selective adjustments.

Training and Implementation

Both segmentation and detection models rely on deep learning techniques, particularly convolutional neural networks (CNNs). However, their architectures and training processes differ:

- Object Detection Models:

- Architectures: Popular architectures include Faster R-CNN, YOLO (You Only Look Once), and SSD (Single Shot MultiBox Detector).

- Training: Requires labeled datasets with bounding box annotations. The model learns to predict the coordinates of bounding boxes and the class labels of objects within those boxes.

- Segmentation Models:

- Architectures: Common architectures include U-Net, Mask R-CNN, and Fully Convolutional Networks (FCNs).

- Training: Requires labeled datasets with pixel-level annotations. The model learns to classify each pixel in the image, either for semantic segmentation or instance segmentation.

Example: Training a Segmentation Model Using Mask R-CNN

Here’s a simplified example of how to train an instance segmentation model using Mask R-CNN and the PyTorch library:

Setup:

import torch

import torchvision

from torchvision.models.detection import maskrcnn_resnet50_fpn

# Load a pre-trained Mask R-CNN model

model = maskrcnn_resnet50_fpn(pretrained=True)

# Replace the classifier with a new one for our specific number of classes

num_classes = 2 # 1 class (object) + background

in_features = model.roi_heads.box_predictor.cls_score.in_features

model.roi_heads.box_predictor = torchvision.models.detection.faster_rcnn.FastRCNNPredictor(in_features, num_classes)

# Add a new mask predictor for our specific number of classes

in_features_mask = model.roi_heads.mask_predictor.conv5_mask.in_channels

hidden_layer = 256

model.roi_heads.mask_predictor = torchvision.models.detection.mask_rcnn.MaskRCNNPredictor(in_features_mask, hidden_layer, num_classes)

Training Loop:

# Load the dataset

# Assume CustomDataset is a Dataset class that loads images and their corresponding masks

train_dataset = CustomDataset(image_paths, annotations, transforms=...)

train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=2, shuffle=True, num_workers=4)

# Training loop

device = torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu')

model.to(device)

optimizer = torch.optim.SGD(model.parameters(), lr=0.005, momentum=0.9, weight_decay=0.0005)

num_epochs = 10

for epoch in range(num_epochs):

model.train()

for images, targets in train_loader:

images = [img.to(device) for img in images]

targets = [{k: v.to(device) for k, v in t.items()} for t in targets]

optimizer.zero_grad()

loss_dict = model(images, targets)

losses = sum(loss for loss in loss_dict.values())

losses.backward()

optimizer.step()

print(f"Epoch: {epoch}, Loss: {losses.item()}")

print("Training complete.")

Segmentation and detection are two essential tasks in computer vision, each serving different purposes and requiring distinct approaches. While object detection focuses on identifying and localizing objects within an image, segmentation provides a more detailed, pixel-level understanding of the image. Understanding the differences between these techniques and their applications can help in selecting the appropriate method for specific tasks in various domains. With the advent of powerful deep learning models and frameworks, implementing these techniques has become more accessible, driving advancements in fields ranging from autonomous driving to medical imaging.