Defining Rejection Criteria in an AI-Driven Inspection System

Published on: Jun 05, 2025

Written by: Content team, Intelgic

In today’s dynamic and high-speed manufacturing landscape, AI-powered inspection systems have emerged as critical tools for maintaining consistent product quality and optimizing operational efficiency. Their ability to detect defects in real time, with high precision and scalability, offers significant advantages over traditional inspection methods. However, the effectiveness of these systems is fundamentally dependent on the establishment of clear, well-defined rejection criteria. Without precise thresholds and structured defect classifications, even the most sophisticated AI models can misalign with quality standards—resulting in false rejections, undetected flaws, and costly disruptions.

This article presents a comprehensive framework for defining and implementing rejection criteria in AI-driven inspection environments, integrating technical accuracy with real-world manufacturing considerations to ensure reliability, compliance, and quality assurance at scale.

Understand the Inspection Objective

A clear and thorough understanding of the inspection system's purpose is the foundation for setting effective rejection criteria in any AI-driven quality control process. Before defining thresholds or building defect detection logic, it’s essential to first identify what types of defects the system is expected to catch and how each should be prioritized. For instance, is the inspection focused on surface imperfections like scratches and color inconsistencies, or on more critical issues such as dimensional deviations, cracks, or missing components? Not all defects have the same impact on product performance or customer satisfaction. Some may pose safety or compliance risks and must be rejected without exception, while others might be cosmetic and acceptable depending on the product’s end use. By clearly classifying and prioritizing defect types at the outset, manufacturers ensure that the AI inspection system aligns with both internal quality standards and customer expectations.

Key Considerations:

- Target Defect Types: Define what the AI system should detect, e.g., surface scratches, color mismatches, dimensional inconsistencies, cracks, or missing components.
- Defect Prioritization: Determine the impact of each defect type:
  - Critical (e.g., structural crack): Requires immediate rejection.
  - Minor (e.g., light scratch): May be acceptable or conditionally passed.
- Alignment with Quality Goals: Ensure the inspection criteria reflect the product’s functional requirements, industry standards, and customer-specific quality expectations.

Define Defect Categories and Severity

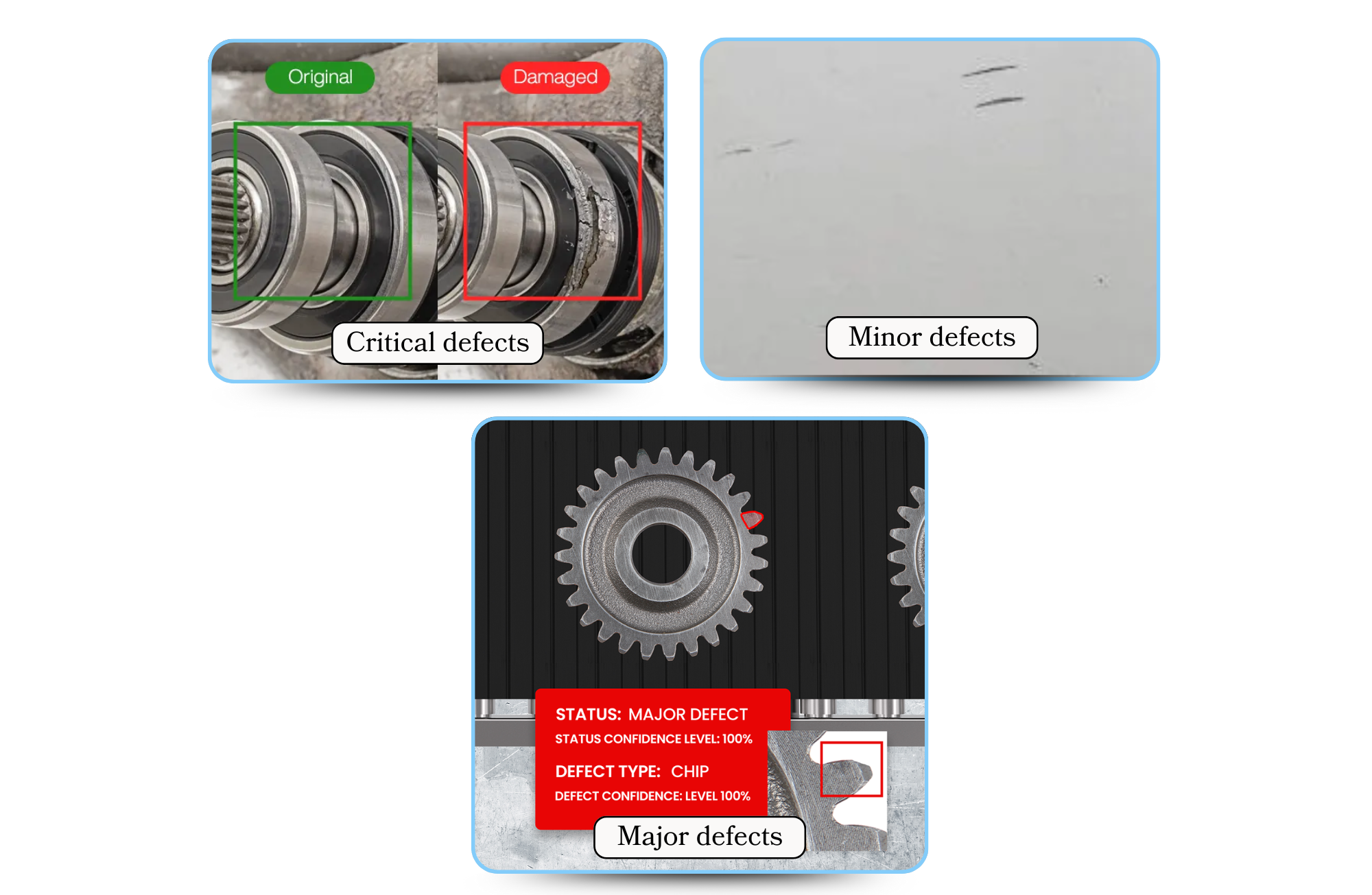

After identifying the relevant defect types, the next crucial step is to classify these defects based on their severity. This categorization serves as the foundation for determining how the inspection system should respond to different defect scenarios. Defects are typically grouped into three severity levels—critical, major, and minor—each reflecting the potential impact on product functionality, safety, or appearance.

- Critical defects represent serious issues that compromise safety, regulatory compliance, or core functionality. Examples include structural cracks, missing components, or electrical faults. These defects necessitate immediate rejection, as they pose unacceptable risks to users or violate industry standards.
- Major defects affect the usability, performance, or fit of the product without necessarily endangering safety. Issues such as incorrect dimensions or improper assembly fall into this category and also warrant rejection, though they may not be as urgent as critical flaws.
- Minor defects are cosmetic in nature, such as superficial scratches or slight color variations, and do not interfere with product performance. These may be accepted under specific conditions or passed with a note for rework or monitoring.
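The three severity levels above can be sketched as a small lookup that maps each detected defect to a disposition. This is a minimal illustration, not a reference implementation: the defect labels, the `disposition_for` helper, and the choice to treat unknown labels as critical are all assumptions made for the example.

```python
from enum import Enum


class Severity(Enum):
    CRITICAL = "critical"
    MAJOR = "major"
    MINOR = "minor"


# Hypothetical defect labels; a real taxonomy would come from your own quality plan.
DEFECT_SEVERITY = {
    "structural_crack": Severity.CRITICAL,
    "missing_component": Severity.CRITICAL,
    "electrical_fault": Severity.CRITICAL,
    "incorrect_dimension": Severity.MAJOR,
    "improper_assembly": Severity.MAJOR,
    "superficial_scratch": Severity.MINOR,
    "slight_color_variation": Severity.MINOR,
}

# Disposition per severity level, following the descriptions above.
DISPOSITION = {
    Severity.CRITICAL: "reject",
    Severity.MAJOR: "reject",
    Severity.MINOR: "accept_with_note",
}


def disposition_for(defects):
    """Return the strictest disposition for a list of detected defect labels."""
    if not defects:
        return "accept"
    # Assumption: an unrecognized label is treated as critical rather than ignored.
    severities = [DEFECT_SEVERITY.get(d, Severity.CRITICAL) for d in defects]
    if any(DISPOSITION[s] == "reject" for s in severities):
        return "reject"
    return "accept_with_note"
```

A part with only a superficial scratch is passed with a note, while the same part with an added structural crack is rejected, because the strictest disposition wins.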

Set Quantitative Thresholds

To enable AI-driven inspection systems to function with accuracy and consistency, qualitative defect assessments must be converted into precise, quantifiable parameters. This transformation allows the system to make objective decisions based on data rather than subjective judgment. One of the most effective ways to achieve this is by defining clear, measurable thresholds that correspond to the types and severity of defects identified during the planning phase.

For example:

- Size-Based Thresholds: Set maximum allowable dimensions for defects, such as “Reject if scratch length exceeds 5 mm.” This ensures consistent handling of surface imperfections.
- Count-Based Limits: Specify acceptable defect frequency within a defined area, such as “Reject if more than 3 blemishes appear in a 10 cm² zone.” This is particularly useful for spotting clusters of minor defects.
- Color Tolerance: Utilize color deviation metrics like the Delta E (ΔE) value in Lab color space to maintain visual consistency. For instance, “Reject if ΔE exceeds 3” ensures color fidelity across production batches.
- Geometric Deviation: Define acceptable variances in dimensions and alignments, such as “Accept if width is within ±0.2 mm.” This is critical for parts requiring tight tolerances.

These quantifiable thresholds should be grounded in internal quality benchmarks, industry regulations (such as ISO standards), and any client-specific requirements. When properly defined and implemented, they allow the AI system to perform consistent, defensible inspections that support high-quality output and regulatory compliance.
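As a rough sketch, the four example thresholds above could be expressed as a single check that returns the reasons a part fails. The field names, the 50 mm nominal width, and the use of the simple CIE76 formula for ΔE (Euclidean distance between two Lab colors) are assumptions for illustration; a production system may use a different ΔE formula and its own measurement schema.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Thresholds:
    max_scratch_length_mm: float = 5.0
    max_blemishes_per_zone: int = 3    # counted within one 10 cm² zone
    max_delta_e: float = 3.0
    nominal_width_mm: float = 50.0     # hypothetical nominal dimension
    width_tolerance_mm: float = 0.2


def delta_e_cie76(lab_ref, lab_sample):
    """CIE76 color difference: Euclidean distance between two (L, a, b) triples."""
    return math.dist(lab_ref, lab_sample)


def rejection_reasons(m, t=Thresholds()):
    """Return the list of thresholds a measurement dict violates (empty = pass)."""
    reasons = []
    if m["scratch_length_mm"] > t.max_scratch_length_mm:
        reasons.append("scratch_length")
    if m["blemish_count"] > t.max_blemishes_per_zone:
        reasons.append("blemish_count")
    if delta_e_cie76(m["lab_reference"], m["lab_measured"]) > t.max_delta_e:
        reasons.append("color")
    if abs(m["width_mm"] - t.nominal_width_mm) > t.width_tolerance_mm:
        reasons.append("width")
    return reasons
```

Returning the list of violated thresholds, rather than a bare pass/fail flag, keeps each rejection traceable to a specific criterion.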

Incorporate Statistical Confidence

AI-driven inspection systems not only detect defects but also attach a confidence score to each prediction, indicating how certain the model is about its decision. Incorporating confidence thresholds into rejection criteria prevents over-reliance on low-certainty predictions. For example, a rule such as “Reject only if the model is more than 95% confident that a defect is critical” ensures that only highly probable issues are rejected automatically. A high threshold reduces false positives (incorrectly rejecting good parts), but it can increase false negatives (failing to catch real defects), so predictions that fall below it are better routed to human review than passed silently. Over time, these confidence thresholds should be fine-tuned based on real-world data and inspection performance, allowing the system to make increasingly accurate decisions.
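One way to apply the 95% rule is a small routing function. The sketch below assumes the model returns a class label and a confidence between 0 and 1; the `decide` name, the `"ok"` label, and the 0.60 lower bound for the manual-review band are hypothetical values chosen for the example, not recommendations.

```python
def decide(predicted_class, confidence, reject_threshold=0.95, review_threshold=0.60):
    """Route one prediction to 'reject', 'manual_review', or 'accept'."""
    if predicted_class == "ok":
        return "accept"
    # Auto-reject only when the model is more than 95% confident in the defect.
    if confidence > reject_threshold:
        return "reject"
    # Assumption: mid-confidence defect predictions go to a human inspector.
    if confidence >= review_threshold:
        return "manual_review"
    # Assumption: very low-confidence defect predictions are treated as noise.
    return "accept"
```

The review band is a design choice: it keeps uncertain defect predictions from being either rejected outright or passed without a second look, at the cost of some inspector time.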

Include Contextual & Business Rules

In addition to technical defect criteria, it's essential to embed contextual and business considerations into the AI inspection framework. These rules ensure that quality control aligns not just with manufacturing standards, but also with customer expectations and operational efficiency goals.

- Customer-Specific Tolerances: Adjust defect thresholds and acceptance criteria based on individual client specifications or contractual agreements, recognizing that different customers may have varying quality expectations.
- Production Speed vs. Quality Trade-offs: Determine acceptable inspection strictness based on production demands, e.g., high-volume runs may allow for slightly relaxed criteria, while premium products require tighter controls.
- End-Use Consideration: Factor in the location and visibility of the defect, e.g., a flaw on the back of a hidden panel may be acceptable, while one on a visible surface is not.
- Regulatory and Market Requirements: Align inspection outcomes with relevant industry standards, certifications, or regional compliance needs.
- Business Impact Awareness: Evaluate the cost of rework or rejection against its potential effect on customer satisfaction, warranty claims, or brand reputation.
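Two of these rules, customer-specific tolerances and defect visibility, lend themselves to a simple layered configuration. The following is a sketch under stated assumptions: the customer identifier, the override values, and the factor of 2 applied to hidden surfaces are invented for illustration and would be replaced by contractual figures.

```python
# Default limits, reusing the example thresholds from earlier in the article.
BASE_LIMITS = {"max_scratch_length_mm": 5.0, "max_delta_e": 3.0}

# Hypothetical per-customer overrides; real values come from client specifications.
CUSTOMER_OVERRIDES = {
    "premium_customer": {"max_scratch_length_mm": 2.0, "max_delta_e": 1.5},
}

# Assumption: limits on hidden surfaces are relaxed by this factor.
HIDDEN_SURFACE_FACTOR = 2.0


def limits_for(customer_id):
    """Merge the base limits with any overrides defined for this customer."""
    return {**BASE_LIMITS, **CUSTOMER_OVERRIDES.get(customer_id, {})}


def scratch_acceptable(length_mm, customer_id, visible_surface):
    """Check a scratch against the customer's limit, relaxed on hidden surfaces."""
    limit = limits_for(customer_id)["max_scratch_length_mm"]
    if not visible_surface:
        limit *= HIDDEN_SURFACE_FACTOR
    return length_mm <= limit
```

Keeping overrides separate from the base limits means a new customer contract changes configuration only, not inspection logic.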

Iteratively Calibrate with Real Data

Even the most advanced AI inspection systems benefit from continuous refinement. To achieve optimal accuracy and reliability, it is essential to start with conservative settings and adjust them as real inspection data accumulates.

Confusion Matrix Analysis:

Regularly evaluate the system’s performance by analyzing true positives (correct defect detections), false positives (incorrect rejections), false negatives (missed defects), and true negatives (good parts correctly passed). This analysis provides insight into where the model excels and where it requires improvement.

Feedback Loop:

Establish a continuous feedback process where inspection outcomes and human reviews inform ongoing adjustments. This ensures that the AI model adapts to evolving production conditions and new defect patterns.

Threshold Re-evaluation:

Periodically review and fine-tune defect thresholds and confidence levels to minimize unnecessary rejections while maintaining stringent defect detection, striking a balance between sensitivity and specificity.

Data-Driven Improvement:

Use real-world inspection data to guide systematic enhancements, thereby increasing the system’s accuracy and fostering greater trust from operators and quality managers.
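The calibration steps above can be sketched as a function that scores one candidate confidence threshold against human-verified outcomes. It assumes `labels` holds the verified ground truth (`True` for a defective part) and `scores` holds the model's defect confidence for the same parts; both names and the sample data in the usage lines are hypothetical.

```python
def metrics_at(threshold, labels, scores):
    """Confusion-matrix counts, precision, and recall for one reject threshold."""
    preds = [s >= threshold for s in scores]
    tp = sum(1 for y, p in zip(labels, preds) if y and p)
    fp = sum(1 for y, p in zip(labels, preds) if not y and p)
    fn = sum(1 for y, p in zip(labels, preds) if y and not p)
    tn = sum(1 for y, p in zip(labels, preds) if not y and not p)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "precision": precision, "recall": recall}


# Hypothetical review data: three defective parts followed by three good parts.
labels = [True, True, True, False, False, False]
scores = [0.99, 0.90, 0.70, 0.96, 0.40, 0.10]

for threshold in (0.60, 0.80, 0.95):
    print(threshold, metrics_at(threshold, labels, scores))
```

Sweeping several thresholds over the same reviewed data makes the trade-off visible: a stricter threshold rejects fewer good parts but misses more real defects, and the acceptable balance depends on defect severity.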

Setting rejection criteria in an AI-driven inspection system is a comprehensive process that blends technical precision with real-world production insights. It involves more than just programming detection rules—it requires a thoughtful framework that classifies defect types, translates qualitative observations into measurable thresholds, applies statistical confidence levels, and integrates human oversight for edge cases. When executed well, this structured approach transforms AI from a simple detection tool into a powerful enabler of consistent, data-driven quality assurance.

As manufacturing environments evolve, so must your rejection strategies. By continuously refining inspection parameters based on operational data, shifting customer expectations, and emerging technologies, organizations can ensure that their AI systems remain aligned with business objectives and capable of delivering high-impact results in ever-changing production landscapes.