What is Semantic Segmentation in Computer Vision

Published on: Aug 14, 2024

Written by: Admin

Semantic Segmentation in Computer Vision and Image Processing

Semantic segmentation classifies each pixel in an image into categories, offering detailed, pixel-level annotations for precise object localization and identification in computer vision.

Key Concepts in Semantic Segmentation

- Pixel-wise Classification: Each pixel in the image is assigned a class label, enabling the differentiation of various objects and regions within the image.

- Class Labels: The categories or classes assigned to pixels. For example, in a street scene, possible classes might include "road," "car," "pedestrian," "building," etc.

- Mask: A binary or multi-class image where each pixel value corresponds to a specific class label, effectively segmenting the image into different regions.

Applications of Semantic Segmentation

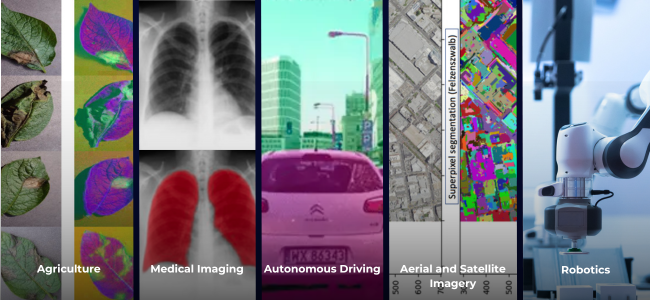

Semantic segmentation has a wide range of applications across various fields:

- Autonomous Driving: Identifying and classifying different elements of a road scene, such as lanes, vehicles, pedestrians, and traffic signs, to facilitate safe navigation.

- Medical Imaging: Segmenting anatomical structures, tissues, and abnormalities in medical scans (e.g., MRI, CT) to assist in diagnosis, treatment planning, and research.

- Aerial and Satellite Imagery: Analyzing land cover, urban planning, and environmental monitoring by classifying different regions in aerial or satellite images.

- Agriculture: Segmenting crops, fields, and other agricultural elements for precision farming, yield estimation, and plant health monitoring.

- Robotics: Enabling robots to understand and interact with their environment by identifying and categorizing different objects and surfaces.

Techniques and Models for Semantic Segmentation

Several techniques and models have been developed to achieve high accuracy in semantic segmentation. Some of the most widely used methods include:

- Fully Convolutional Networks (FCNs):

- FCNs replace the fully connected layers in traditional CNNs with convolutional layers, allowing the network to output a segmentation map instead of a single class label.

- The final layer of an FCN is usually a 1x1 convolution that produces the class probabilities for each pixel.

- U-Net:

- Originally designed for biomedical image segmentation, U-Net has become a popular architecture for various segmentation tasks.

- U-Net consists of an encoder-decoder structure with skip connections that combine feature maps from the encoder and decoder stages, improving localization accuracy.

- DeepLab:

- Developed by Google, the DeepLab series of models (DeepLabv1, DeepLabv2, DeepLabv3, DeepLabv3+) leverage atrous (dilated) convolutions and conditional random fields (CRFs) to capture multi-scale context and refine segment boundaries.

- DeepLabv3+ includes an encoder-decoder structure that enhances the model's ability to capture fine details.

- SegNet:

- SegNet uses an encoder-decoder architecture where the encoder captures the spatial features, and the decoder upsamples the features to produce the segmentation map.

- The unpooling layers in the decoder use the pooling indices from the encoder to improve segmentation accuracy.

Example: Implementing Semantic Segmentation with U-Net

Here’s a simplified example of how to implement semantic segmentation using the U-Net architecture with Python and TensorFlow:

- Setup and Data Preparation:

import tensorflow as tf

from tensorflow.keras.layers import Input, Conv2D, MaxPooling2D, UpSampling2D, concatenate

from tensorflow.keras.models import Model

# Define the U-Net model

def unet_model(input_size=(256, 256, 1)):

inputs = Input(input_size)

# Encoder

conv1 = Conv2D(64, 3, activation='relu', padding='same')(inputs)

conv1 = Conv2D(64, 3, activation='relu', padding='same')(conv1)

pool1 = MaxPooling2D(pool_size=(2, 2))(conv1)

conv2 = Conv2D(128, 3, activation='relu', padding='same')(pool1)

conv2 = Conv2D(128, 3, activation='relu', padding='same')(conv2)

pool2 = MaxPooling2D(pool_size=(2, 2))(conv2)

# Decoder

up1 = UpSampling2D(size=(2, 2))(conv2)

merge1 = concatenate([conv1, up1], axis=3)

conv3 = Conv2D(64, 3, activation='relu', padding='same')(merge1)

conv3 = Conv2D(64, 3, activation='relu', padding='same')(conv3)

outputs = Conv2D(1, 1, activation='sigmoid')(conv3)

model = Model(inputs=inputs, outputs=outputs)

return model

# Compile the model

model = unet_model()

model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])

# Summary of the model

model.summary()

- Training the Model:

# Assume X_train and Y_train are the training images and corresponding masks

# Train the model

model.fit(X_train, Y_train, batch_size=8, epochs=10, validation_split=0.1)

- Evaluating and Using the Model:

# Evaluate the model on the test set

# Assume X_test and Y_test are the test images and corresponding masks

loss, accuracy = model.evaluate(X_test, Y_test)

print(f"Test Loss: {loss}, Test Accuracy: {accuracy}")

# Make predictions

predictions = model.predict(X_test)

Semantic segmentation is a powerful technique in computer vision and image processing, providing detailed pixel-level classification of images. It plays a crucial role in various applications, from autonomous driving to medical imaging, by enabling precise localization and identification of objects. Understanding the key concepts, techniques, and models used in semantic segmentation can help in developing effective solutions for complex visual tasks. With the availability of advanced architectures like FCNs, U-Net, DeepLab, and SegNet, implementing semantic segmentation has become more accessible and efficient, driving innovation across different fields.