Objective

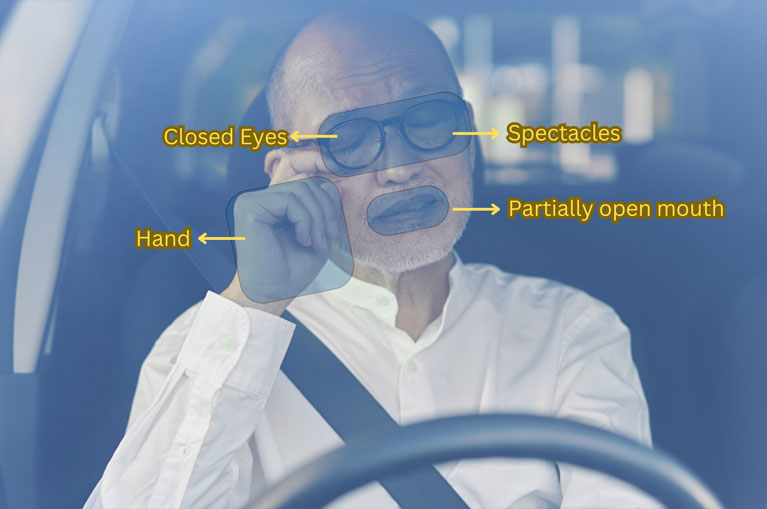

The objective of the project was to build an AI-based driver activity monitoring system that would classify predefined driver activities and generate alerts for them. The new system would be integrated with the existing camera system, which would supply the videos. The plan was to choose an AI model, train it on the client's data to produce annotation-level classifications that were as accurate as possible, and then develop an activity detection algorithm for each classification. Our goal was to achieve 95% accuracy for the algorithms that work on top of the AI output.
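To make the two-stage idea concrete, here is a minimal sketch of how a detection algorithm might sit on top of the model's per-frame output. It is an illustration only, not the project's actual algorithm: the function name `detect_activity`, the label strings, and the `window` and `min_hits` parameters are all hypothetical. It assumes the model emits one class label per frame and raises an alert when a label dominates a sliding window of recent frames.

```python
from collections import deque


def detect_activity(frame_labels, target, window=30, min_hits=24):
    """Return the frame indices at which an alert for `target` starts.

    Hypothetical rule: an alert starts when at least `min_hits` of the
    last `window` per-frame labels equal `target`. A new alert is only
    raised after the condition has stopped holding, so one continuous
    activity produces one alert.
    """
    recent = deque(maxlen=window)  # rolling record of matches (True/False)
    alerts = []
    active = False  # whether the alert condition held on the previous frame
    for i, label in enumerate(frame_labels):
        recent.append(label == target)
        hit = len(recent) == window and sum(recent) >= min_hits
        if hit and not active:
            alerts.append(i)
        active = hit
    return alerts


# Illustrative input: made-up labels standing in for the model's output.
labels = ["safe"] * 10 + ["phone"] * 10 + ["safe"] * 10
alert_frames = detect_activity(labels, "phone", window=5, min_hits=4)
```

Smoothing over a window like this means a single misclassified frame neither triggers nor cancels an alert, which is one reason the accuracy of the algorithm can differ from the per-frame accuracy of the model.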